Unity recently introduced a plugin called ML-Agents, which integrates Machine Learning into the Unity environment. There were ways to implement Machine Learning in Unity before, but they were horrifically complicated. With the introduction of ML-Agents, it has finally become significantly easier to bring Machine Learning into your project, even if you don’t have a PhD in Deep Learning 😊 Developers can now use external Python libraries such as TensorFlow and Keras, which are among the most popular tools for Deep Learning.

In this blogpost we’ll look at what ML-Agents lets us do that we previously couldn’t, and compare it with a way of solving the same task without Deep Learning. The task we’ll use for the comparison is continuous control.

Continuous control is best illustrated with this example and this example: creatures teach themselves how to walk from scratch in a simulated environment. Looks awesome, doesn’t it?

Alright, in this blogpost we’ll design a creature in the scene and implement its AI using two algorithms. The AI will teach itself how to walk from scratch, without any help. The first algorithm will be implemented using ML-Agents, and the second using Evolutionary Computation.

Quick Overview / Theory

ML-Agents

Machine Learning (ML) is a very broad topic, and starting from the very beginning goes way beyond the scope of this post, so we’ll just focus on the techniques and algorithms ML-Agents uses. The ML-Agents plugin allows you to train intelligent agents for different tasks using neural networks. As the official documentation states, this can be done using Reinforcement Learning (RL), imitation learning, neuroevolution (NE) and other techniques you can write using the Python API. Unfortunately, at the time of writing this blogpost, NE is unavailable within ML-Agents, so the most suitable technique for our task is RL. RL itself can be done with numerous algorithms, but by default ML-Agents uses one called Proximal Policy Optimization (PPO). It was developed by OpenAI and has become the default RL algorithm there. You can read more about it here.
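To give a rough feel for what PPO optimizes, here is a minimal sketch of its clipped objective for a single sample. This is not code from ML-Agents. The function name and arguments are made up for illustration, and the clip range of 0.2 is just a commonly used default.

```python
def ppo_clipped_objective(ratio, advantage, epsilon=0.2):
    """Clipped surrogate objective for one sample (illustrative only).

    ratio     -- probability of the action under the new policy divided by
                 its probability under the old policy
    advantage -- how much better the action turned out than expected
    epsilon   -- clip range; 0.2 is an assumed, commonly used default
    """
    # Keep the ratio inside [1 - epsilon, 1 + epsilon].
    clipped_ratio = max(1.0 - epsilon, min(ratio, 1.0 + epsilon))
    # Take the more pessimistic of the two estimates, so a single update
    # cannot push the policy too far from the old one.
    return min(ratio * advantage, clipped_ratio * advantage)
```

The point of the clipping is stability: however good an action looks, the policy is only rewarded for moving a limited distance towards it in one update.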

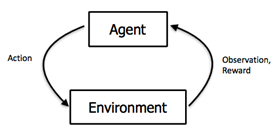

The idea behind RL is very simple. The Agent you’re training doesn’t have any training data. Everything it learns, it learns from its own experience through trial-and-error with the goal of maximizing long-term reward.

The Agent needs feedback, in the form of a reward, on whether an action was good or bad. When training starts, the Agent takes an action. Based on this action, the system rewards or punishes the Agent, tweaking the neural network to fit the environment better. The neural network is responsible for decision making, aka behaviour.
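The trial-and-error loop can be sketched in a few lines of Python. This is a toy, not what ML-Agents does internally: a simple table of estimated rewards stands in for the neural network, and the environment is a hypothetical one where each action pays off with a fixed probability. All names and default values here are assumptions for illustration.

```python
import random

def train_bandit(reward_probs, steps=2000, epsilon=0.1, lr=0.1, seed=0):
    """Learn which action pays off best, purely from rewards."""
    rng = random.Random(seed)
    # The agent's current estimate of how rewarding each action is.
    values = [0.0] * len(reward_probs)
    for _ in range(steps):
        # Explore occasionally, otherwise exploit the best-known action.
        if rng.random() < epsilon:
            action = rng.randrange(len(values))
        else:
            action = max(range(len(values)), key=lambda a: values[a])
        # The environment rewards (+1) or punishes (-1) the action.
        reward = 1.0 if rng.random() < reward_probs[action] else -1.0
        # Nudge the estimate for that action towards the observed reward.
        values[action] += lr * (reward - values[action])
    return values

# Hypothetical environment: action 1 succeeds far more often than action 0.
estimates = train_bandit([0.1, 0.9])
```

Nobody tells the agent which action is correct. It only sees rewards, and its estimates drift towards the action that pays off more often.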

That’s pretty much it. In ML-Agents, you don’t have to code your neural network from scratch (unless you want a custom solution). Just tell the Agent what parameters it should take as input, assign the outputs and rewards, and you’re done. The plugin will do the heavy lifting for you.

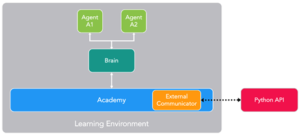

To set up the learning environment in ML-Agents, you only need three components. These are Agents, Brains and the Academy:

- Agent – can be any character in the scene. Agent generates observations, takes actions and receives the rewards. In our task it’s going to be the creature.

- Brain – the component that determines the Agent’s behavior: it receives the observations and rewards from the Agent and returns an action.

- Academy – a component that manages the environment-wide parameters and communicates with the Python API.
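To make the division of responsibilities concrete, here is a toy Python sketch of the three roles. It is not the ML-Agents API (in Unity these are components in the scene). The class and method names are invented, and the Brain uses a hard-coded rule instead of a trained neural network.

```python
class Brain:
    """Maps an observation to an action (a fixed rule, not a neural network)."""
    def decide(self, observation):
        # Move towards the target, which sits at position 0.
        return -1 if observation > 0 else 1

class Agent:
    """Generates observations, takes actions and receives rewards."""
    def __init__(self, brain, position):
        self.brain = brain
        self.position = position
        self.total_reward = 0.0

    def step(self):
        observation = self.position               # collect an observation
        action = self.brain.decide(observation)   # ask the Brain what to do
        self.position += action                   # take the action
        self.total_reward += -abs(self.position)  # closer to 0 is better

class Academy:
    """Holds environment-wide settings and drives the simulation loop."""
    def __init__(self, agents, max_steps):
        self.agents = agents
        self.max_steps = max_steps

    def run(self):
        for _ in range(self.max_steps):
            for agent in self.agents:
                agent.step()

creature = Agent(Brain(), position=5)
Academy([creature], max_steps=5).run()
```

Several Agents can share one Brain, which is why the Brain is passed into the Agent rather than created inside it.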

Evolutionary Computation

In the case of Evolutionary Computation (EC), there are no plugins or systems that could massively help you. You have to implement it yourself. You might ask: why choose EC, then? Well, EC is fairly easy to implement and, to a certain degree, well suited to continuous control tasks. The EC method is also well described in a series of blogposts written by Alan Zucconi, who demonstrates it on the example of teaching creatures to walk on their own.

So, I’m not going to explain the whole logic behind EC here. It’s much better to take a look at Alan’s blogposts. Here’s a link, once again 😊

In short, EC is an approach that mimics natural selection. You have definitely heard of Darwin’s concept of survival of the fittest: the weakest (least well adapted) members of a species die off and the strongest survive. Over many generations, the species evolves to become better adapted to its environment. This concept can also be used in a computer program. It might not find the best solution but, like a neural network, EC is used to approximate one.

In evolutionary computation, we create a population of potential solutions to a problem. These are often random solutions, so they are unlikely to solve the problem being tackled or even come close. But some will be slightly better than others. The computer can discard the worst solutions, retain the better ones and use them to “breed” more potential solutions. Parts of different solutions are combined (this is called “crossover”) to create a new generation of solutions that can then be tested, and the process begins again. One of the most important parts of the algorithm is mutation: a small percentage of randomly chosen parts of a solution is adjusted or changed completely to create a new combination.
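The loop described above can be sketched in Python as follows. This is a generic illustration under assumed settings, not the implementation used later in this series or in Alan Zucconi’s posts: a solution is simply a list of numbers, and the function name, population size, mutation rate and other parameters are all made up for the example.

```python
import random

def evolve(fitness, genome_length, population_size=30, generations=60,
           mutation_rate=0.1, seed=0):
    """Evolve a list of numbers that scores well under `fitness`."""
    rng = random.Random(seed)
    # Start from completely random solutions.
    population = [[rng.uniform(-1, 1) for _ in range(genome_length)]
                  for _ in range(population_size)]
    for _ in range(generations):
        # Rank solutions from best to worst and keep the better half.
        population.sort(key=fitness, reverse=True)
        survivors = population[:population_size // 2]
        children = []
        while len(survivors) + len(children) < population_size:
            mother, father = rng.sample(survivors, 2)
            # Crossover: each gene comes from one of the two parents.
            child = [rng.choice(pair) for pair in zip(mother, father)]
            # Mutation: a small share of genes is randomly nudged.
            child = [g + rng.gauss(0, 0.1) if rng.random() < mutation_rate else g
                     for g in child]
            children.append(child)
        population = survivors + children
    return max(population, key=fitness)

# Hypothetical problem: get as close as possible to a target vector.
target = [0.5, -0.3, 0.8]

def closeness(genome):
    return -sum((g - t) ** 2 for g, t in zip(genome, target))

best = evolve(closeness, genome_length=len(target))
```

Because the survivors are carried over unchanged, the best solution never gets worse from one generation to the next. For a walking creature, the fitness function would instead be something like the distance travelled during a simulation run.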

EC is a very broad term that includes many different algorithms and techniques. In this example we’ll use one technique called Evolutionary Programming. The idea behind it is to have a fixed algorithm whose parameters are subject to optimization.

Alright, that’s it for the quick overview of the algorithms. In the next part we’ll take a look at the implementation details.