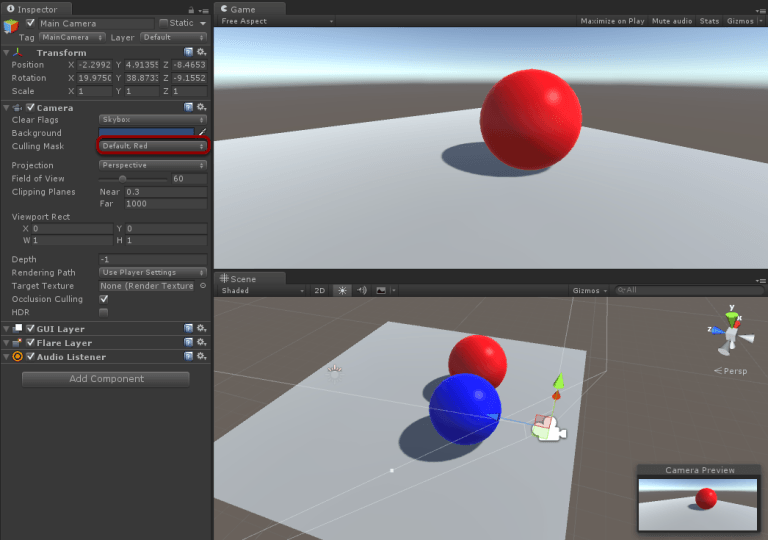

Have you ever wondered how much time it takes to apply snow to all of the textures in your game? Probably more than once. We’d like to show you how to create an Image Effect (a screen-space shader) that instantly changes the season of your scene in Unity. How does it work? In the images above you […]

Let It Snow! How To Make a Fast Screen-Space Snow Accumulation Shader In Unity